What is Audio Phase?

Introduction

When processing an audio signal, have you ever encountered a situation in which it does not sound right regardless of what you do? For some reason, despite the fact that everything appears to work in theory, the sound just seems thin and uninspiring.

In music production, phase describes the timing relationship between sound waves. It comes into play whenever two signals share content, such as two instruments playing the same note or two microphones recording the same instrument.

There is the possibility that you are simply working with a poor-quality recording, but it is more likely that you have an unsuspected phase issue lurking in your mix.

In this article we will define phase, look at when and why it can be beneficial and when it can be problematic, and cover how to manage it when it falls into the latter category. Let us dive in and resolve this phase issue once and for all.

What is Audio Phase?

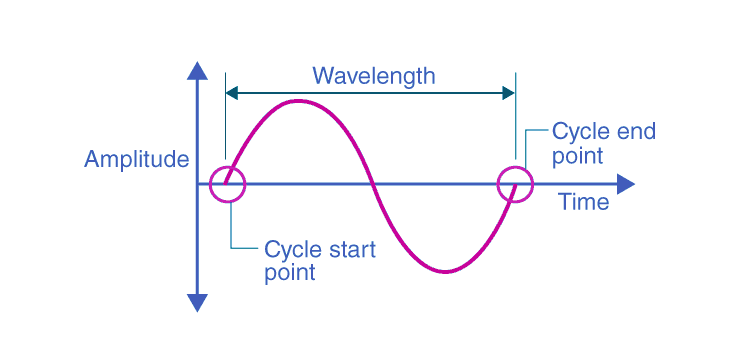

In audio, phase refers to a position in time within a waveform's cycle. Imagine a sine wave plotted on a graph with time on the x-axis: phase is the position along that axis within one cycle, usually expressed in degrees from 0 to 360. Periodic sounds repeat the same wave shape over and over, and the phase tells us where in that repeating shape the wave begins or currently sits.

Put another way, phase is the point within a sound wave's cycle at a given moment in time. Before going further, it helps to recall three properties that describe a sound wave: its amplitude, its wavelength, and its frequency.

Amplitude refers to how loud the wave is at a particular time; wavelength is the distance between two consecutive matching points (for example, two successive peaks) of a repeating sound wave; frequency (which we perceive as pitch) is the number of times per second the wave repeats itself.
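To make these properties concrete, here is a minimal Python sketch. The function names and the speed of sound value (343 m/s, roughly dry air at 20 degrees Celsius) are assumptions chosen for illustration and do not come from any particular audio library.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees Celsius


def wavelength_m(frequency_hz):
    """Distance in meters covered by one cycle of the wave."""
    return SPEED_OF_SOUND_M_S / frequency_hz


def sine_value(amplitude, frequency_hz, phase_deg, time_s):
    """Instantaneous value of a sine wave that starts at the given phase."""
    angle = 2 * math.pi * frequency_hz * time_s + math.radians(phase_deg)
    return amplitude * math.sin(angle)


# Under the assumption above, one cycle of a 100 Hz tone spans about 3.43 m.
print(wavelength_m(100.0))

# At time zero, a 90-degree starting phase puts the wave at its positive peak.
print(sine_value(1.0, 100.0, 90.0, 0.0))
```

Changing `phase_deg` while leaving amplitude and frequency alone moves the wave along the time axis without altering its shape, which is exactly what phase describes.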

Phase tells us precisely where we are in the wave's cycle. In audio production, what really matters is the phase relationship between two or more sound waves; the absolute phase of a single sound wave does not really matter, for reasons that will be discussed shortly.

In essence, everything we hear is a sound wave, or more precisely the vibration of air. When we import or record any piece of audio, these waves appear visually as peaks and troughs in our digital audio workstations.

During peaks, the speaker cone is pushed in one direction; during troughs, it is pulled in the opposite direction. When two signals are out of alignment, they ask the cone to move in conflicting directions at the same moment. As a result, the energy of the sound decreases when the two sound waves are not aligned (out of phase) and increases when they are aligned (in phase).
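The effect of alignment on energy can be sketched numerically. The snippet below is an illustrative example, not a production tool: it sums two equal sine waves with a chosen phase offset and reports the peak level of the result. The function name, the 100 Hz test tone, and the 48 kHz sample rate are all assumptions.

```python
import math


def summed_peak(phase_offset_deg, frequency_hz=100.0, sample_rate=48000, amplitude=1.0):
    """Peak level of two equal sine waves summed with a phase offset between them."""
    samples_per_cycle = int(sample_rate / frequency_hz)
    offset = math.radians(phase_offset_deg)
    peak = 0.0
    for i in range(samples_per_cycle):
        angle = 2 * math.pi * frequency_hz * i / sample_rate
        first = amplitude * math.sin(angle)
        second = amplitude * math.sin(angle + offset)
        peak = max(peak, abs(first + second))
    return peak


# In phase the waves reinforce, at 180 degrees they cancel,
# and offsets in between give something in between.
for degrees in (0, 90, 180):
    print(degrees, round(summed_peak(degrees), 3))
```

For two equal sine waves the summed peak follows `2 * amplitude * abs(cos(offset / 2))`, so even a partial misalignment costs some level.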

What is Phase Cancellation?

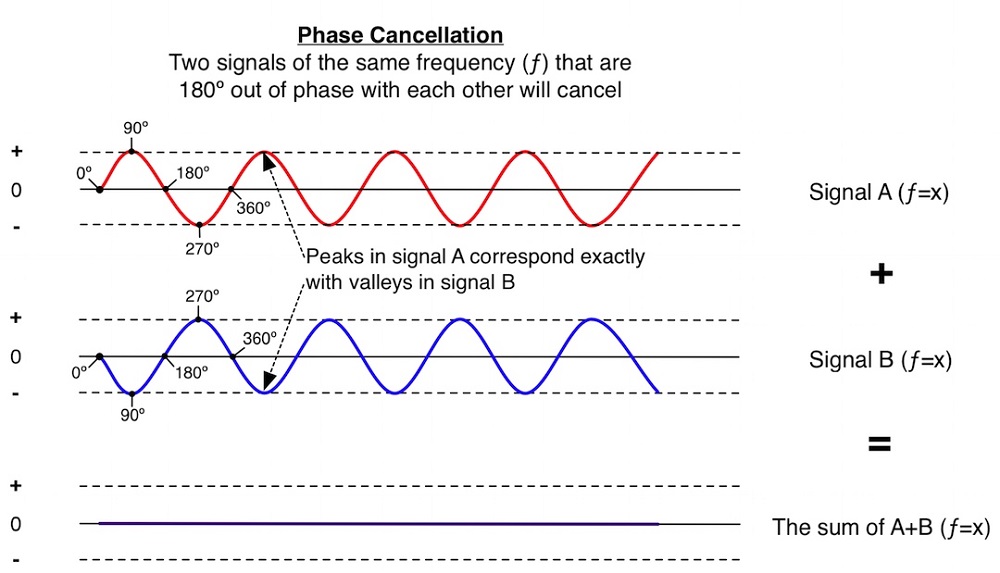

The phase relationship between two sources is determined by the difference in their timing, and how strongly they reinforce or cancel each other also depends on their relative amplitudes. Like any vibration, a sound wave consists of both positive and negative movements.

If you watch a speaker cone in slow motion, you will see it move back and forth, causing changes in air pressure that the human ear interprets as sound. Phase cancellation occurs when two sine waves of the same frequency do not arrive at the pickup source (such as a microphone) at the same time, which reduces the level of the summed signal.

The peaks of one wave no longer line up with the peaks of the other, so the waves work against each other rather than supporting one another. In this situation, the affected frequencies partially or completely cancel, and the result sounds weak.
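A fixed time offset does not affect every frequency equally. As a rough sketch (the function names and the 20 kHz upper limit are assumptions for illustration), the code below converts a delay into a phase offset and lists the frequencies at which two equal copies of a signal, one of them delayed, end up 180 degrees apart and cancel.

```python
def phase_offset_deg(frequency_hz, delay_s):
    """Phase offset in degrees caused by a delay (can exceed 360 for long delays)."""
    return 360.0 * frequency_hz * delay_s


def cancellation_frequencies(delay_s, max_hz=20000.0):
    """Frequencies at which a signal and its delayed copy are 180 degrees apart."""
    first_null = 1.0 / (2.0 * delay_s)
    frequencies = []
    k = 0
    # Nulls fall on odd multiples of the first null frequency.
    while (2 * k + 1) * first_null <= max_hz:
        frequencies.append((2 * k + 1) * first_null)
        k += 1
    return frequencies


delay = 0.001  # a 1 ms offset between two microphones, as an example
print(phase_offset_deg(500.0, delay))
print(cancellation_frequencies(delay)[:3])
```

This is why a small timing error between two microphones tends to hollow out specific frequency bands rather than simply lowering the overall volume.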

In pro audio, phase cancellation can arise electrically, when improper wiring produces out-of-phase electrical signals, or acoustically, when the sound waves captured on multiple tracks are offset from one another. In this article we concentrate on the acoustic side, since electrical phase cancellation requires a deeper technical background.

How Does Audio Phase Affect Music?

From subtle tonal changes to complete sonic mayhem, phase can create a variety of effects in music, and serious music producers should understand it. Phase can be used in music production in a few different ways.

One use is stereo widening: two signals that are out of phase with one another between the left and right channels can create a wide, expansive sound. Phase can also help when a sound is too quiet or thin. For example, a phase plugin can thicken up a bass guitar recording that sounds too thin.
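One thing worth checking with out-of-phase widening is how the result behaves when the left and right channels are summed to mono. The sketch below is only an illustration with assumed function names: it computes a simple correlation value between two channels (+1 means identical, -1 means fully inverted) and the mono sum.

```python
import math


def correlation(left, right):
    """Normalized correlation between two channels, from -1.0 to +1.0."""
    numerator = sum(l * r for l, r in zip(left, right))
    denominator = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return numerator / denominator if denominator else 0.0


def mono_sum(left, right):
    """Collapse a stereo pair to mono by averaging the channels."""
    return [(l + r) / 2 for l, r in zip(left, right)]


tone = [math.sin(2 * math.pi * i / 64) for i in range(64)]
inverted = [-value for value in tone]

# A fully inverted right channel sounds very wide in stereo,
# but it correlates at -1 and disappears completely in mono.
print(correlation(tone, inverted))
print(max(abs(value) for value in mono_sum(tone, inverted)))
```

In practice, widening effects use partial offsets rather than full inversion, so the mono sum loses some level instead of vanishing entirely.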

Phase also creates a sense of depth and space in music. Delaying certain frequencies can lead the brain to perceive a three-dimensional soundscape. This technique can make music more immersive and can also make the mix more effective, since it prevents sounds from competing for the same space.

In addition, phase is useful for creative purposes. Delaying one signal relative to another can produce a wide range of interesting effects, from sound effects to added depth and dimension in your tracks. Used creatively, phase can produce a spacey and sometimes robotic sound.

Fixing Phasing Issues

Microphone Placement

When setting up microphones, professional engineers sometimes use a length of cable to measure distances. To diminish the effects of bad phase relationships, they often rely on the 3:1 rule, which can be applied by eye.

As a general rule of thumb, each additional microphone should be placed at least three times as far from the first microphone as the first microphone is from the source. As an example, imagine that your main microphone is recording an acoustic guitar from a distance of 4 inches. The second microphone should then be at least 12 inches (3 times the original distance) from the first microphone.
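The arithmetic behind the 3:1 guideline is simple enough to sketch. The helper names below are hypothetical, and the level estimate assumes an idealized point source in free space where level falls off with distance, which real rooms only approximate.

```python
import math


def min_mic_spacing(source_distance, ratio=3.0):
    """Minimum spacing between microphones for a given mic-to-source distance."""
    return ratio * source_distance


def level_drop_db(distance_ratio):
    """Approximate level drop in dB when sound travels distance_ratio times as far.

    Assumption: ideal point source in free space (inverse-distance law).
    """
    return 20.0 * math.log10(distance_ratio)


# Main microphone 4 inches from the guitar, as in the example above.
print(min_mic_spacing(4))

# Sound that travels about three times as far arrives roughly 9.5 dB quieter.
print(round(level_drop_db(3.0), 1))
```

The guideline does not remove the timing difference between the microphones; it works by making the leaked signal quiet enough that any cancellation it causes is much less audible.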

If the problem does not show up until you are mixing, you can usually pull up the tracks in your DAW, zoom in close on the two waveforms, and slightly nudge one track until they line up. A shift of one or two milliseconds can have a tremendous impact on the sound of a track.
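To get a feel for how small these nudges are, the following sketch converts milliseconds to samples and shifts a track represented as a plain list of sample values. The function names and the 44.1 kHz default sample rate are assumptions for illustration; a DAW handles this for you when you drag a clip.

```python
def ms_to_samples(offset_ms, sample_rate=44100):
    """Convert a time offset in milliseconds to the nearest whole number of samples."""
    return round(offset_ms * sample_rate / 1000.0)


def nudge(track, offset_samples):
    """Shift a track in time.

    Positive offsets move it later by padding the start with silence;
    negative offsets move it earlier by dropping samples from the start.
    """
    if offset_samples >= 0:
        return [0.0] * offset_samples + list(track)
    return list(track[-offset_samples:])


# One millisecond at 44.1 kHz is only about 44 samples.
print(ms_to_samples(1))
print(nudge([0.5, 0.25, -0.5], 2))
```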

Fix the Problem from the Beginning

The best way to resolve phase issues is to prevent them from occurring in the first place. Many microphone preamps and audio interfaces have a switch marked with the Ø symbol, as do utility plugins in your digital audio workstation. A simple polarity flip can sometimes solve the problem.

This switch inverts the polarity of the signal, which for a simple waveform is equivalent to a 180-degree phase shift. To find out whether two signals are out of phase, press it and listen carefully to the result.

If engaging the Ø switch brings back a significant amount of low end and punch, your signals were probably out of phase. During recording, you may encounter several situations in which two signals are roughly 180 degrees out of phase.

A common example is a snare drum recorded with both top and bottom microphones. The two microphones face the drum from opposite sides, so when the top head moves away from the top microphone, the bottom head moves toward the bottom microphone, and the two signals have opposite polarity. When recording both sides of the snare, be sure to check your phase.
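The Ø listening test can also be approximated numerically. The sketch below uses hypothetical names and a synthetic test signal in place of real snare recordings: it compares the level of the summed pair with and without a polarity flip and suggests whichever is louder. Your ears should still make the final call.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(value * value for value in samples) / len(samples))


def suggest_polarity(first, second):
    """Return 'flip' if inverting the second signal gives a louder sum, else 'keep'."""
    normal = rms([a + b for a, b in zip(first, second)])
    flipped = rms([a - b for a, b in zip(first, second)])
    return "flip" if flipped > normal else "keep"


# Synthetic stand-ins: the bottom mic captures an inverted, slightly quieter copy.
top_mic = [math.sin(2 * math.pi * i / 32) for i in range(64)]
bottom_mic = [-0.8 * value for value in top_mic]

print(suggest_polarity(top_mic, bottom_mic))
```

Real recordings are rarely perfect inverted copies of each other, so the difference between the two sums is usually smaller than in this idealized case.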

Plug-ins for Automatic Alignment

An automatic alignment plugin can be useful when enhancing live drums with samples, because each sample needs to match a hit that was played live, with micro variations in timing.
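How any particular plugin finds the right offset is not something this article can speak to. One common general technique for estimating the time offset between two similar signals is cross-correlation, sketched below in a deliberately simple brute-force form. The function name and the test signals are assumptions for illustration.

```python
def best_lag(reference, other, max_lag):
    """Estimate how many samples `other` trails `reference`.

    Brute-force cross-correlation: try every lag in the range and keep the
    one at which the two signals match best. A positive result means
    `other` is late; a negative result means it is early.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, value in enumerate(reference):
            j = i + lag
            if 0 <= j < len(other):
                score += value * other[j]
        if score > best_score:
            best, best_score = lag, score
    return best


# A short synthetic "hit", and a copy of it that arrives 5 samples late.
live_hit = [0.0] * 10 + [1.0, 0.5, -0.3] + [0.0] * 20
sample_hit = [0.0] * 5 + live_hit[:-5]

lag = best_lag(live_hit, sample_hit, max_lag=8)
print(lag)

# Dropping that many samples from the start lines the copy up again.
aligned = sample_hit[lag:]
```

This version is slow for long tracks because it tests every lag one by one, but it shows the idea: the offset that maximizes the match between the two waveforms is the one to correct.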

The good news is that phase-fixing tools are abundant today. In-Between Phase by Little Labs and Eventide Precision Time Align are some of the best examples.

If you can recognize phase problems by ear, simply nudging one channel relative to the other can also resolve them. Keep in mind that this trick will not always work, especially if you are following the grid strictly.

Manual Fix

We favor this method over the others because you are fully in control of the sound of your track. You align tracks by dragging them backward or forward until their peaks and troughs correspond to one another, thereby achieving a better phase relationship.

Lining the waveforms up perfectly by eye gives the best phase response in most cases, though not always. The strength of the manual approach lies in the ability to fine-tune by ear until you achieve the desired low-end punch or the best balance.

There are no hard and fast rules here. You simply move the track until you are satisfied that you have achieved the best balance in your mix.

Conclusion

You should now have a clear understanding of what phase is in a technical sense and how it relates to polarity. You can also think about how phase relationships relate to time delay, in both stereo and mono contexts, and understand how they are intertwined. Our discussion covered when it is appropriate to address phase issues and when it is not, as well as four methods for addressing them.

As you become more familiar with phase, your mixes will improve and you will encounter fewer surprises when collapsing stereo tracks into mono. So stay vigilant, make good use of the knowledge and tools available to you, and do not let phase catch you off guard.

If you have any other questions about this topic, please let us know in the comments and we will be happy to explain further. Until next time!