What Is Audio Normalization

Introduction

Normalizing audio is the process of bringing a recording's amplitude to a target level, or norm, by applying a constant amount of gain to the whole recording. In peak normalization, for example, normalizing software finds the loudest peak in the file, calculates how much gain would bring that peak to the target level, and then applies that same gain to the rest of the audio.
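As a minimal sketch of what "a constant amount of gain" means, here is some Python that assumes the audio is a plain list of floating-point samples where 1.0 is full scale (0 dBFS). The function names `db_to_linear` and `apply_gain` are hypothetical and do not come from any particular editor or library.

```python
def db_to_linear(gain_db: float) -> float:
    """Convert a gain in decibels to a linear amplitude factor."""
    return 10 ** (gain_db / 20)


def apply_gain(samples: list[float], gain_db: float) -> list[float]:
    """Multiply every sample by the same factor, i.e. a constant gain."""
    factor = db_to_linear(gain_db)
    return [s * factor for s in samples]


# +6 dB multiplies every sample by roughly 2 (about 1.995)
louder = apply_gain([0.1, -0.25, 0.4], 6.0)
```

Because every sample is multiplied by the same factor, the ratio between the loud and quiet parts of the recording does not change.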

You should consider audio normalization to give your video a better audio balance, especially for dialogue clips that need to stand out on YouTube and other social media platforms. Normalization is also still commonly used for other reasons, such as getting optimal results from older AD/DA converters or matching a group of audio files to the same volume level.

That leaves the question: what exactly does audio normalization mean, and what are the best methods for normalizing digital audio files? This article discusses both, so keep reading.

Two Types of Audio Normalization

Peak Normalization

Peak normalization uses the highest signal level in the recording as its reference point. For example, if the loudest peak in a song sits at -6 dBFS and the target is 0 dBFS, the entire song is boosted by 6 dB.

With a 0 dB target, the loudest peak ends up exactly at 0 dBFS, which is what you should aim for in this case. This type of normalization is what you would use to normalize each track in a session separately. It is probably safe to say that peak normalization is the most popular method of normalizing audio.
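Peak normalization can be sketched in a few lines of Python. This assumes float samples with 1.0 as full scale; `peak_dbfs` and `peak_normalize` are hypothetical helper names, not a specific program's API.

```python
import math


def peak_dbfs(samples: list[float]) -> float:
    """Level of the highest absolute sample, in dB relative to full scale (1.0)."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return float("-inf")  # silence has no peak
    return 20 * math.log10(peak)


def peak_normalize(samples: list[float], target_dbfs: float = 0.0) -> list[float]:
    """Apply one constant gain so the loudest peak lands at target_dbfs."""
    current = peak_dbfs(samples)
    if current == float("-inf"):
        return list(samples)  # nothing to normalize
    gain_db = target_dbfs - current  # e.g. 0 - (-6) = +6 dB
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]


# Loudest peak is 0.5 (about -6 dBFS), so the whole clip gets about +6 dB
song = [0.1, -0.5, 0.25]
normalized = peak_normalize(song)  # peak is now 1.0 (0 dBFS)
```

Passing a lower `target_dbfs`, such as -1.0, is a simple way to leave a little headroom below full scale.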

Loudness Normalization

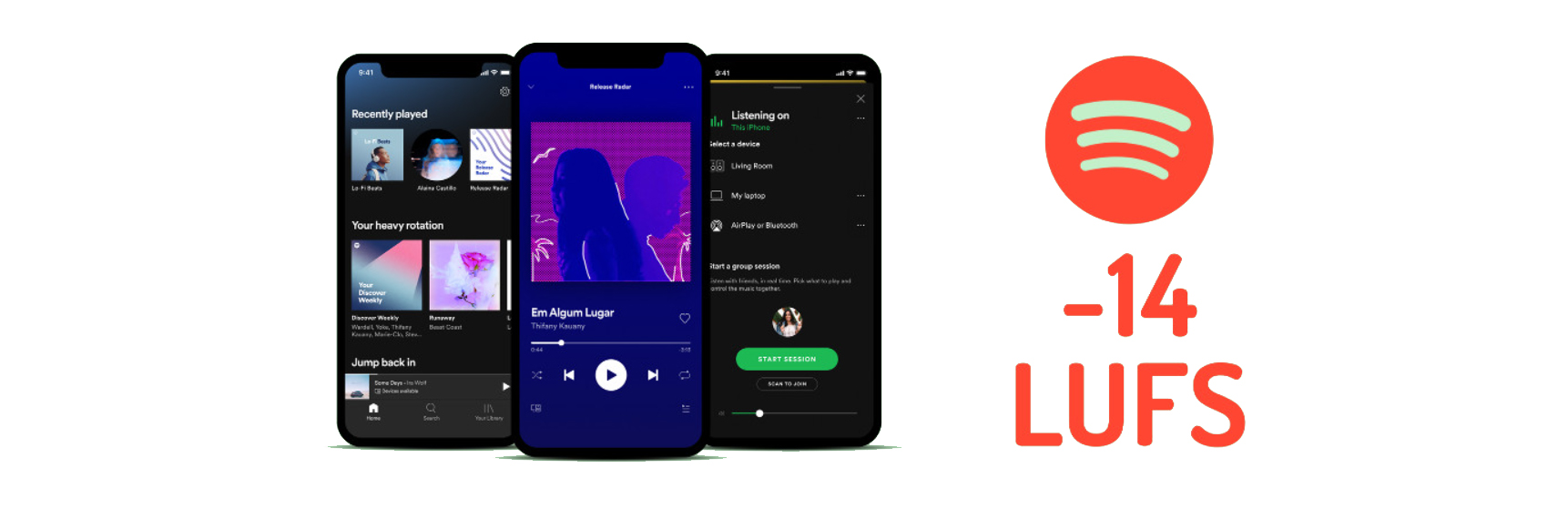

Loudness normalization uses a measurement of the overall loudness of a recording rather than its single highest peak. The gain is adjusted so that the recording's overall loudness reaches a specified level. Several measurements can be used for this, including RMS, but LUFS (loudness units relative to full scale) has become the industry standard.
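A proper LUFS measurement (ITU-R BS.1770 K-weighting plus gating) is too long for a short example, so this sketch swaps in plain RMS, one of the other measures mentioned above. It assumes float samples with 1.0 as full scale; the function names and the -20 dBFS default target are hypothetical choices for illustration.

```python
import math


def rms_dbfs(samples: list[float]) -> float:
    """Root-mean-square level in dB relative to full scale."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")
    return 10 * math.log10(mean_square)  # same as 20 * log10(rms)


def rms_normalize(samples: list[float], target_dbfs: float = -20.0) -> tuple[list[float], bool]:
    """Scale the clip so its RMS level matches target_dbfs.

    Returns the new samples and a flag that is True if any sample now
    exceeds full scale (|s| > 1.0) and would clip.
    """
    current = rms_dbfs(samples)
    if current == float("-inf"):
        return list(samples), False
    factor = 10 ** ((target_dbfs - current) / 20)
    out = [s * factor for s in samples]
    return out, any(abs(s) > 1.0 for s in out)


quiet_clip = [0.1, -0.1] * 4  # RMS of 0.1, i.e. -20 dBFS
louder_clip, clipped = rms_normalize(quiet_clip, target_dbfs=-14.0)
```

A loudness target says nothing about where the peaks end up, which is why the sketch also reports whether the new gain pushed any sample past full scale.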

Loudness normalization is sometimes applied to a master after it leaves the mastering engineer's hands, often without the engineer being aware of it. Streaming services and platforms are the most common place to find it.

Spotify, for example, uses a standard of -14 LUFS. Their policy is to turn a song that measures -10 LUFS down by 4 dB. This way, you do not have to keep changing the volume on your playback device when listening to songs from different artists.
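The arithmetic behind the Spotify example is simply the target loudness minus the measured loudness. A minimal sketch, assuming a plain offset; the function name is hypothetical, and real services may apply additional rules (for example, when a quiet track would need to be turned up) that this ignores.

```python
def playback_gain_db(measured_lufs: float, target_lufs: float = -14.0) -> float:
    """Gain a platform would apply to bring a track to its loudness target.

    Negative values mean the track is turned down.
    """
    return target_lufs - measured_lufs


print(playback_gain_db(-10.0))  # -4.0: a -10 LUFS master is turned down by 4 dB
```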

Why Normalize Audio?

Normalization has been available for digital audio for a long time, but opinions in the music community are still divided. One group says normalizing can degrade your audio's quality; another sees it as a useful tool for improving it. So what is the purpose of normalizing audio, and when should it be done?

To match the volume levels between clips: Say you want all segments of your program at the same volume level, for example an intro music segment, a narrated segment, and an interview segment. To make sure none of them is too loud or too soft, you can use the normalize tool. A voiceover, for instance, should not be noticeably louder or quieter than the clip that precedes it.
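Matching several segments can be sketched by giving every clip the same RMS level. This assumes float samples with 1.0 as full scale; the clip names, sample values, `match_clips` helper, and -20 dBFS target are all hypothetical, and RMS stands in here for whatever loudness measure your editor uses.

```python
import math


def rms_dbfs(samples: list[float]) -> float:
    """Root-mean-square level in dB relative to full scale."""
    ms = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(ms) if ms > 0 else float("-inf")


def match_clips(clips: dict[str, list[float]], target_dbfs: float = -20.0) -> dict[str, list[float]]:
    """Give every clip the same RMS level so none sounds too loud or too soft."""
    matched = {}
    for name, samples in clips.items():
        level = rms_dbfs(samples)
        if level == float("-inf"):
            matched[name] = list(samples)  # leave silent clips alone
            continue
        factor = 10 ** ((target_dbfs - level) / 20)
        matched[name] = [s * factor for s in samples]
    return matched


program = {
    "intro_music": [0.5, -0.5, 0.5, -0.5],
    "narration": [0.05, -0.05, 0.05, -0.05],
    "interview": [0.2, -0.1, 0.15, -0.2],
}
levels = {name: round(rms_dbfs(s), 2) for name, s in match_clips(program).items()}
# every value in levels is -20.0
```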

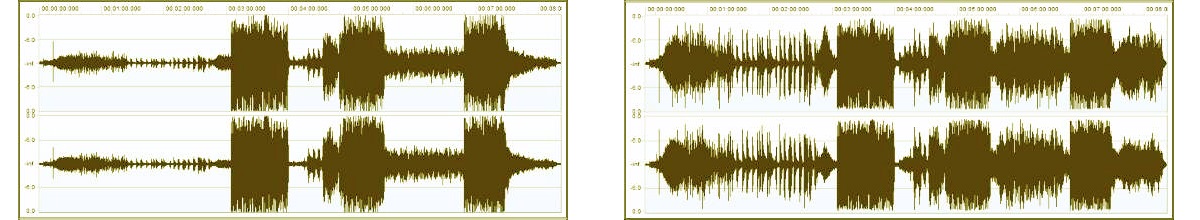

To increase the volume of the original file: A recording may also need to be normalized if it is not loud enough. If the volume is too low, you may not be able to see the waveform or hear the content clearly, which makes editing almost impossible. The amplify effect lets you resolve these issues quickly.

Beginners often raise concerns about normalization when they first encounter it, and some claim that it makes audio sound significantly different or degraded. This was actually a problem roughly three decades ago because of the processing algorithms used at the time, but it has since been resolved.

Use the normalize effect wisely, though. In some situations it is best avoided because there are better ways to achieve the same result: automation, clip gain, or plugins can often adjust a signal's volume more appropriately.

Manual Normalization

When leveling out a performance, the first thought that usually crosses our mind is “this is a one-click process, so I should just do it.” There is another way, though, and we prefer to do it the hard way.

If you have a quiet audio file and want to bring it up, you can do so from the editing window in your DAW, raising it as much as you need. We usually suggest not going all the way to maximum volume and instead leveling it relative to the other signals in your arrangement.

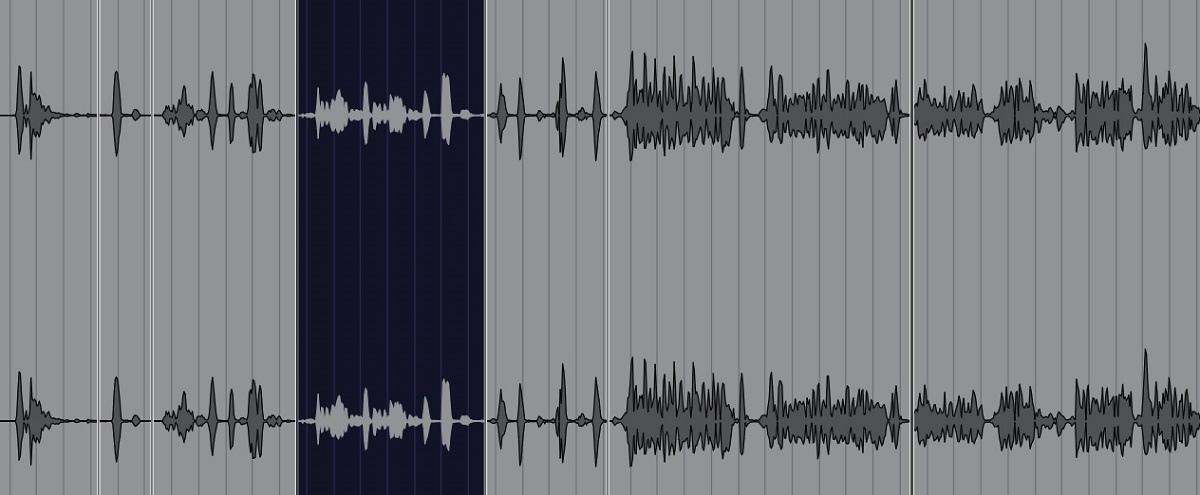

That is actually the easy part. We always turn to manual normalization when we have an uneven vocal signal. Say you have rap vocals where the performance needs to be consistent. You can use the old, trustworthy phrase-by-phrase normalization, where you manually cut the recording at the points between the quiet and loud parts of the performance.

After that, all you need to do is even out the phrases so they have equal peaks, aiming for each phrase to sit at the average volume. This is a long process that requires going through the recording several times, but it is worth it in the end: you get an even recording, and the audio signal is ready for further processing.
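The phrase-by-phrase approach can be sketched in Python, assuming float samples with 1.0 as full scale and cut points that have already been picked by ear. Using the average of the phrase peak levels (in dB) as the common target is one possible reading of "aim for the average volume", not a rule from any tool, and `phrase_normalize` is a hypothetical helper.

```python
import math


def phrase_normalize(samples: list[float], cuts: list[int]) -> list[float]:
    """Level a performance phrase by phrase (hypothetical helper).

    `cuts` are the sample indices where you split the recording between
    phrases, chosen by hand as in the manual method. Each phrase gets its
    own constant gain so that every phrase peak lands on the same level:
    the average of the original phrase peak levels, in dB.
    """
    bounds = [0, *cuts, len(samples)]
    phrases = [samples[a:b] for a, b in zip(bounds, bounds[1:])]
    # Peak level of each non-silent phrase, in dBFS
    peaks_db = [20 * math.log10(max(abs(s) for s in p)) for p in phrases if any(p)]
    if not peaks_db:
        return list(samples)  # nothing but silence
    target_db = sum(peaks_db) / len(peaks_db)

    out: list[float] = []
    for p in phrases:
        peak = max((abs(s) for s in p), default=0.0)
        if peak == 0:
            out.extend(p)  # silent gap: leave untouched
            continue
        factor = 10 ** ((target_db - 20 * math.log10(peak)) / 20)
        out.extend(s * factor for s in p)
    return out


# Loud phrase (peak 0.8) followed by a quiet one (peak 0.2):
# both end up with a peak of about 0.4
leveled = phrase_normalize([0.8, -0.4, 0.2, -0.1], cuts=[2])
```

This only evens out the peaks; listening back through the recording, as described above, is still what tells you whether the phrases actually sound even.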

Audio Normalization Cons

Keep in mind that audio normalization also has some disadvantages. It is generally recommended to normalize only at the end of the production process. There is no need to normalize individual clips that will later be mixed together in a multitrack recording: when several tracks that have each been normalized to 0 dBFS play at the same time, their sum can easily exceed full scale and clip.
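A tiny illustration of why summing normalized tracks clips, assuming both tracks are float sample lists already peak-normalized to 1.0 (0 dBFS) and mixed at unity gain. The track values and the `mix` helper are made up for the example.

```python
def mix(*tracks: list[float]) -> list[float]:
    """Sum tracks sample by sample at unity gain, like a simple mix bus.

    zip() stops at the shortest track, so tracks are assumed equal length.
    """
    return [sum(frame) for frame in zip(*tracks)]


vocals = [1.0, -0.6, 0.3]  # peak-normalized to 0 dBFS
guitar = [0.7, -1.0, 0.2]  # also peak-normalized to 0 dBFS
bus = mix(vocals, guitar)  # roughly [1.7, -1.6, 0.5]

# Samples beyond full scale (|s| > 1.0) would clip when rendered
# to a fixed-point file or sent to a converter
over = [s for s in bus if abs(s) > 1.0]
```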

Normalization can also be destructive: when you normalize, the gain change is written into the audio itself. Because it is committed at a specific point in your workflow, normalization should be performed only after you have processed your audio files to your satisfaction.

Peak normalization is often used on audio clips just to make the waveform easier to see on screen. You should not do that; your program should let you enlarge the waveform display without permanently altering the audio file.

Aside from that, many media players offer virtual normalization for matching volume levels between finished tracks. In this case, the goal is to play different tracks back at a consistent volume without altering the original files.

These players determine how much to turn down each file by measuring its loudness, for example according to EBU R 128 or as RMS volume. This may not be an optimal option, but it is convenient to hear different songs at the same volume.
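Virtual normalization can be sketched as computing a playback gain and applying it to a copy, so the stored samples never change. This assumes float samples with 1.0 as full scale, uses RMS as the measurement, and only ever turns tracks down, mirroring the "tone down" behavior described; `playback_copy` and the -18 dBFS target are hypothetical.

```python
import math


def rms_dbfs(samples: list[float]) -> float:
    """Root-mean-square level in dB relative to full scale."""
    ms = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(ms) if ms > 0 else float("-inf")


def playback_copy(samples: list[float], target_dbfs: float = -18.0) -> list[float]:
    """Return a volume-matched copy for playback; the original list is untouched.

    The gain is capped at 0 dB, so loud tracks are turned down and quiet
    (or silent) tracks are played as they are.
    """
    gain_db = min(0.0, target_dbfs - rms_dbfs(samples))
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]


loud_track = [0.5, -0.5] * 4    # about -6 dBFS RMS
quiet_track = [0.05, -0.05] * 4  # about -26 dBFS RMS
to_play = [playback_copy(t) for t in (loud_track, quiet_track)]
```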

Compression and Normalization Comparison

Many people mistakenly believe that compression and normalization are the same thing, but that is not true. Compression lowers the peaks of a track and, once makeup gain is applied, brings the quieter parts up relative to them, resulting in a more consistent volume level throughout the track. Normalization, on the other hand, sets the loudest point as the ceiling for your audio recording.

The rest of the audio is then scaled by the same amount of gain, which preserves the dynamic range of the audio while raising its overall level, and therefore its perceived loudness.

With normalization, volume is changed purely by applying a constant amount of gain; in peak normalization, the usual objective is to bring the loudest peak to 0 dBFS. Unlike compression, normalization does not change the dynamics of a recording.

Audio compression, on the other hand, reduces the peaks in your recording so that the whole recording can be made fuller and louder without exceeding the clipping point. Compression changes the gain of the audio over time, by varying amounts depending on the signal level.
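The difference can be made concrete with the crest factor (peak level minus RMS level, in dB), a simple measure of dynamic range. This sketch assumes float samples with 1.0 as full scale, and the compressor is a deliberately simplified static, sample-by-sample one with no attack, release, or makeup gain; real compressors follow the signal's envelope over time. All names and parameter values are hypothetical.

```python
import math


def crest_factor_db(samples: list[float]) -> float:
    """Peak level minus RMS level, in dB."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(peak / rms)


def normalize(samples: list[float], target_peak: float = 1.0) -> list[float]:
    """Peak normalization: one constant gain for every sample."""
    factor = target_peak / max(abs(s) for s in samples)
    return [s * factor for s in samples]


def compress(samples: list[float], threshold_db: float = -12.0, ratio: float = 4.0) -> list[float]:
    """Static compressor for illustration: above the threshold, each dB of
    input produces only 1/ratio dB of output. Below it, samples pass unchanged."""
    out = []
    for s in samples:
        level = 20 * math.log10(abs(s)) if s else float("-inf")
        if level > threshold_db:
            new_level = threshold_db + (level - threshold_db) / ratio
            s = math.copysign(10 ** (new_level / 20), s)
        out.append(s)
    return out


signal = [0.05, -0.1, 0.9, -0.05, 0.1, -0.8]
before = crest_factor_db(signal)
after_norm = crest_factor_db(normalize(signal))  # same as before
after_comp = crest_factor_db(compress(signal))   # smaller than before
```

Normalization leaves the crest factor unchanged because every sample is scaled equally, while the compressor shrinks it by pulling the peaks down toward the quieter material.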

Conclusion

Every audio engineer or producer should be familiar with normalization. Although it is an effective tool, it can also be overused, which results in a loss of quality. Understanding the difference between RMS and peak levels will help you use normalization with appropriate caution.

Be careful with the noise floor: since normalization brings everything up, any background noise is raised by the same amount as the signal. Keep that in mind and you will probably be fine. Trust your ears and analyze the signal after it is processed. We also suggest doing the gain staging process first and only then reaching for normalization, since gain staging might solve the problem in the first place.
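A small numeric sketch of the noise-floor point, assuming the speech and the background hiss are separate float lists that both receive the same normalization gain. The sample values and the gain factor of 5 are hypothetical. The hiss comes up by the same amount as the speech, about 14 dB, so the signal-to-noise ratio stays the same while the noise becomes easier to hear.

```python
import math


def level_db(samples: list[float]) -> float:
    """RMS level in dBFS (assumes the list is not silent)."""
    return 10 * math.log10(sum(s * s for s in samples) / len(samples))


speech = [0.2, -0.2, 0.2, -0.2]
hiss = [0.002, -0.002, 0.002, -0.002]  # background noise in the same recording
gain = 1.0 / 0.2  # hypothetical factor that brings the 0.2 peak to full scale

speech_n = [s * gain for s in speech]
hiss_n = [s * gain for s in hiss]

snr_before = level_db(speech) - level_db(hiss)     # about 40 dB
snr_after = level_db(speech_n) - level_db(hiss_n)  # still about 40 dB
noise_rise = level_db(hiss_n) - level_db(hiss)     # hiss is about 14 dB louder
```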

So if you have any additional questions about this topic, feel free to let us know in the comments below and we can elaborate on it further!